Malicious use of manipulated visual and audio files — technology known as deepfakes — is swiftly migrating toward crime and influence operations, according to findings published Thursday.

Threat intelligence company Recorded Future pointed to a recent surge in such activity and a burgeoning underground marketplace that could spell trouble for individuals and companies that use tools like facial recognition technology as part of multi-factor authentication. The report echoes an FBI alert last month warning that nation-backed hackers would increasingly use deepfakes for cyber operations as well as for misinformation and disinformation.

“We believe that threat actors have begun to advertise customized deepfake services that are directed at threat actors interested in bypassing security measures and to facilitate fraudulent activities, specifically fake voices and facial recognition,” the company’s Insikt Group wrote in a blog post.

Recorded Future’s work focuses more on how that trend is playing out in the criminal world.

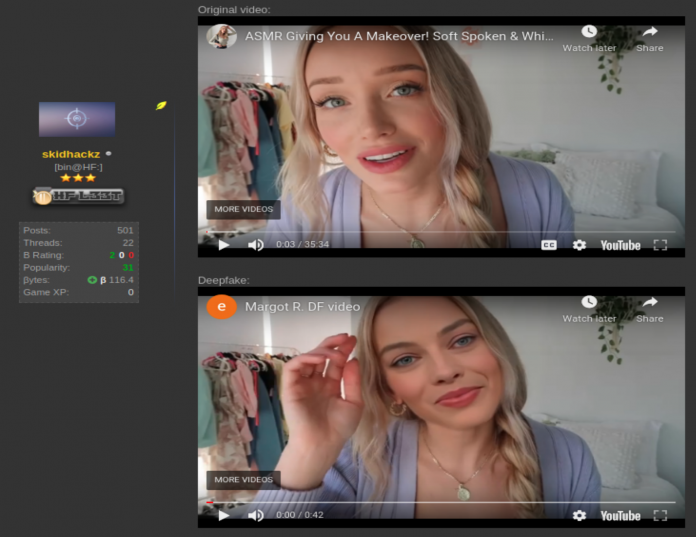

To date, most estimates indicate that deepfakes are used almost entirely for pornography. For years now, the research company Sensity AI has concluded that between 90% and 95% of online deepfake videos are nonconsensual porn. A growing number of legislative proposals aim to curb such harassment, though laws have historically struggled to keep pace with digital abuse.

Recorded Future pointed to incidents dating back as far as 2018, when scammers used a deepfake audio recording mimicking the voice of a U.K. energy executive to seek fraudulent wire transfers from company officials.

That coincides with a rise in dark web advertisements from shady figures offering deepfake services that could be put to criminal use, as well as in requests for such services. One operator, for instance, “posted a request for deepfake services to construct and design fraudulent bank cards, signatures, documents, persons (images), and card numbers that are not detectable via Google or Yandex searches.”

The migration began to take off last summer, according to a threat researcher whom the company asked CyberScoop not to name due to the sensitivity of dark web research.

“You can see a shift from just the general curiosity of it to the fun and games of just changing faces on celebrities, and then a migration into pornographic material, but then around spring to summer of 2020 we see a shift of people beginning to advertise specific services,” the analyst said. The company saw threat actors “saying, ‘Hey I’ve been editing for this long. I’m familiar with certain security procedures that we’re seeing that are potentially coming down the pipe.’”

There are also signs that some criminal activity overlaps between the dark web and publicly available tools and forums, especially messaging platforms like Telegram and Discord, Recorded Future said. And as the barrier to entry for deepfake technology falls, more criminality could follow, the analyst said.